-

The Future of Automated Patch Testing

Over two years of development have led to testing.drupal.org becoming a reality. The testbed has been active for almost two months with virtually no issues related to the automated testing framework. That is not to say the bot has never needed to be disabled, but when it was, the cause was unrelated to the automated testing framework itself.

The stability of the framework has led to the addition of over 6 testing servers to the network, with more in the works. Increasing the number of testing servers also increases the work required to manage the testing network. A fair amount of labor is needed to keep the testing system running smoothly and to watch for bugs introduced into Drupal 7 core.

Having the testing framework in place has saved patch reviewers, specifically the Drupal 7 core maintainers Dries and webchick, countless hours that would otherwise have been spent running tests. The automated framework also ensures that tests are run against every patch before it is committed. Ensuring tests are always run has led to a relatively stable Drupal 7 core that receives updates frequently.

Based on the overwhelmingly positive feedback and personal evaluation of the framework, a number of improvements have been made since its deployment. The enhancements have, of course, led to further ideas which will make the automated testing system much more powerful than it already is.

Server management

A number of additions have been made to make it easier to manage the testing fleet. The major issue that remains is that of confirming that a testing slave returns the proper results. The process currently requires manual testing and confirmation of the results. The process could be streamlined by adding a facility that would send a number of patches with known results to the testing slave in question. The results returned would then be compared to the expected results.

The system could be used at multiple stages during the testing process. Initially when a testing slave is added to the network it would be automatically confirmed or denied. Once confirmed the server would begin receiving patches for testing. Periodically the server would then be re-tested to ensure that it is still functioning. If not the system would automatically disable the server and notify the related server administrator.
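The confirmation step described above can be sketched roughly as follows. This is only an illustration, not code from the testing framework: the `(passes, fails)` result shape, the patch names, and the `run_patch` callback that stands in for sending a patch to a server are all assumptions.

```python
from typing import Callable, Dict, List, Tuple

# (passes, fails) summary for one patch run; this shape is an assumption.
Result = Tuple[int, int]


def mismatched_patches(
    run_patch: Callable[[str], Result],
    expected: Dict[str, Result],
) -> List[str]:
    """Run every known patch and return the names whose results differ."""
    return [
        name
        for name, expected_result in expected.items()
        if run_patch(name) != expected_result
    ]


def server_status(mismatches: List[str]) -> str:
    """A server stays enabled only when every known patch matched."""
    return "enabled" if not mismatches else "disabled"


# Hypothetical known results: one passing patch and one with 3 failures.
EXPECTED = {"known_pass.patch": (120, 0), "known_fail.patch": (117, 3)}


def healthy(name: str) -> Result:
    """Stand-in for a correctly configured server."""
    return EXPECTED[name]


def broken(name: str) -> Result:
    """Stand-in for a misconfigured server that runs nothing."""
    return (0, 0)


print(server_status(mismatched_patches(healthy, EXPECTED)))
print(server_status(mismatched_patches(broken, EXPECTED)))
```

The same check could serve both the initial confirmation and the periodic re-test, since it only depends on the list of known patches.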

Having this sort of functionality opens up some exciting possibilities as described below.

Public server queue

Once the automated server management framework is in place the process of adding servers to the fleet could be exposed to the public. A server donor could create an account on testing.drupal.org, enter their server information, and check back for the results of the automated testing. If errors occur the server administrator would be given common solutions in addition to the debugging facilities already provided by the system.

If the server passes inspection an administrator of testing.drupal.org would be notified. The administrator would then confirm the server donation and add the server to the fleet. Once in the fleet the server would be tested regularly like all the other servers. If the donor wishes to remove their server from the fleet they would request removal of their server on testing.drupal.org. The framework would let any tests finish running and then remove the server automatically.

A system of this kind would provide a powerful and easy way to increase the testing fleet with minimal burden to the testing.drupal.org administrators. Having a larger fleet has a number of benefits that will be discussed further.

Automated core error detection

Automatically testing an un-patched Drupal HEAD checkout after each commit and confirming that all tests pass would ensure that any human mistakes made during the commit process do not cause false test results. In addition to testing the core itself the detection algorithm would also disable the testing framework if drupal.org is unavailable. Currently when drupal.org goes down the testing framework continues to run which causes errors due to the patches not being accessible. Having this sort of system in place would be a great time saver for administrators and ensure that the results are always accurate.
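As a rough sketch of the decision described above, the check could look something like this. The state names and the idea of pausing the queue when un-patched HEAD fails are assumptions made for illustration; the existing code mentioned below may work differently.

```python
from enum import Enum


class QueueState(Enum):
    RUNNING = "running"
    PAUSED_SITE_DOWN = "paused: drupal.org unreachable"
    PAUSED_HEAD_BROKEN = "paused: un-patched HEAD fails tests"


def queue_state(site_reachable: bool, head_failures: int) -> QueueState:
    """Decide whether patches should be tested right now."""
    # Patches cannot be downloaded while drupal.org is down.
    if not site_reachable:
        return QueueState.PAUSED_SITE_DOWN
    # A failing un-patched checkout would make every patch look broken.
    if head_failures > 0:
        return QueueState.PAUSED_HEAD_BROKEN
    return QueueState.RUNNING


print(queue_state(site_reachable=True, head_failures=0).value)
```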

There is currently code in place for this, but it needs expanding and testing.

Multiple database testing

Drupal 7 currently supports three databases and there are plans to support more. Testing patches on each of the databases is crucial to ensure that no database is neglected. Creating such a system would require a few minimal changes to the testing framework to store results related to a particular database, send a patch to be tested on each particular database, and display the results in a logical manner on drupal.org.
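A minimal sketch of those changes, assuming results are keyed by patch and database, might look like this. The database identifiers and function names are hypothetical and only illustrate the fan-out.

```python
from typing import Dict, Iterable, List, Tuple

# Assumed identifiers for the three supported databases.
DATABASES = ("mysql", "pgsql", "sqlite")


def build_jobs(
    patches: Iterable[str], databases: Iterable[str] = DATABASES
) -> List[Tuple[str, str]]:
    """Create one testing job per (patch, database) pair."""
    database_list = list(databases)
    return [(patch, db) for patch in patches for db in database_list]


def passes_everywhere(
    results: Dict[Tuple[str, str], bool],
    patch: str,
    databases: Iterable[str] = DATABASES,
) -> bool:
    """A patch passes only if it passed on every database; missing results count as failing."""
    return all(results.get((patch, db), False) for db in databases)


jobs = build_jobs(["fix.patch"])
results = {job: True for job in jobs}
results[("fix.patch", "sqlite")] = False
print(jobs)
print(passes_everywhere(results, "fix.patch"))
```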

Patch reviewing environment

In addition to performing patch testing, the framework could also be used to lower the barrier to reviewing a patch. Instead of having to apply a patch to a local development environment, a reviewer would simply press a button on testing.drupal.org and then be logged into an automatically set up environment with the patch applied.

This sort of system would save reviewers time and would make it much easier for non-developers to review patches, especially for usability issues.

Code coverage reports

Drupal 7 strives to have full test coverage. What that means is that the tests check almost every part of the Drupal core to ensure that everything works as intended. It is rather difficult to gauge the degree to which core is covered without the use of a code coverage reporting utility. Setting up a utility of that kind is no small task and getting results requires large amounts of CPU time.

The testing framework could be extended to automatically provide code coverage reports on a nightly basis. The reports can then be used, as they have been already, to come up with a plan for writing additional tests to fill the gaps.

Performance ranking

Since the tests are very CPU intensive, having a good idea of the performance of a particular testing slave would be useful for deciding which servers are sent patches first. Ensuring that patches are always sent to the fastest available testing server will ensure the quickest turn-around of results. The testing framework could automatically collect performance data and use an average to rank the testing servers.
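One way the ranking could work is sketched below, assuming the collected performance data is simply the duration of each past test run in seconds. The server names and the choice to skip servers without data are illustrative assumptions.

```python
from statistics import mean
from typing import Dict, List


def rank_servers(durations: Dict[str, List[float]]) -> List[str]:
    """Order servers from fastest to slowest by average test run duration."""
    # Servers with no recorded runs cannot be ranked yet, so they are left out.
    averages = {
        server: mean(times) for server, times in durations.items() if times
    }
    return sorted(averages, key=lambda server: averages[server])


# Hypothetical run times in seconds for three servers.
history = {
    "server-a": [1800.0, 2000.0],
    "server-b": [1200.0, 1300.0],
    "server-c": [],
}
print(rank_servers(history))
```

Using an average keeps a single slow run from pushing a server to the back of the list.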

Standard VM

Creating a standard virtual machine would have a number of benefits: 1) eliminate most configuration issues, 2) provide consistent results, 3) make the process of setting up a testing slave easier, and 4) make it possible for one testing server to test patches on different databases. Several virtual machines are currently in the works, but a standard one has yet to be agreed upon.

Benefits

Drupal is somewhat unique in having an automated system like the one in place. The system has already proven to be a beneficial tool and with the addition of these enhancements it will become a more integral part of Drupal development and quality assurance. Maintaining the system will be much easier, reviewing core patches will be simpler, and the testing fleet can be increased in size much more easily.

With a larger testing fleet the testing framework can be expanded to test contributed modules. In addition, the framework can be modified to support testing of Drupal 6 and thus enable it to test the large number of contributed modules that have tests written. Having such a powerful tool available to contrib developers will hopefully motivate more developers to write tests for their modules and in doing so increase the stability of the contributed code base.

The automated testing framework is just beginning its life-cycle and has already proven its worth. With enhancements like the ones discussed above, the framework can continue to provide new tools to the Drupal community.

-

Keep core clean: issues will be marked CNW by testing framework

The final updates to the testing framework have been placed on drupal.org. The updates will allow issues to be marked as code needs work when the latest eligible patch fails testing.

After a bit of work fixing the latest bugs in core in order to make all tests pass, the testing system has been reset and should now be reporting back to drupal.org again, but this time marking issues as CNW!

Current Drupal 7 issue queue status:

- 267 Pending bugs (D7)

- 241 Critical issues (D7)

- 1116 Patch queue (D7)

- 388 Patches to review (D7)

I hope to see some changes.

On the other hand, this means that if a bug is committed to core that causes the tests to fail, all patches tested will be marked as CNW.

Make sure you wait for testing results on a patch before you mark it as ready to be committed.

-

Testing results on drupal.org!

Today testing.drupal.org began sending results back to drupal.org! The results are displayed under the file they relate to. For now, the reporting will not trigger a change in issue status if the testing fails. That is the future plan, and it will be turned on when the automated testing framework has proven to be stable.

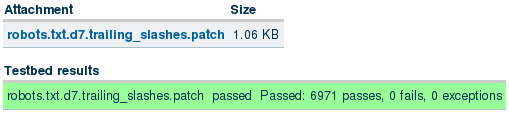

The following is an example of what a pass result looks like on drupal.org.

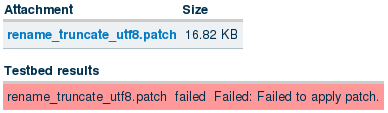

The following is an example of what a fail result looks like on drupal.org.

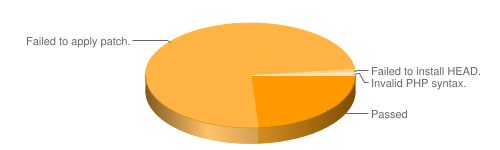

Testing.drupal.org also provides detailed stats on patch results and status. An example of patch result stats is as follows.

Early success

Before the actual reporting was turned on the automated testing framework discovered two core issues. One was a partial commit that caused a PHP syntax error. The other was a bug with the installer. I hope to see the results make patch reviewing much faster and easier.

Your help

-

Testing.drupal.org is running!

After several days of battling the server and the enormous XML file generated by exporting the assertion data, a solution was found. Instead of dumping all the results, the framework stores only summary results: just enough to tell you where the tests failed, while sparing the server.
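To illustrate the idea of keeping only summaries, the sketch below collapses individual assertions into per-test counts. The `(test name, status)` input shape and the three status names are assumptions, not the framework's actual storage format.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def summarize(
    assertions: Iterable[Tuple[str, str]]
) -> Dict[str, Dict[str, int]]:
    """Collapse (test name, status) assertions into counts per test."""
    summary: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"pass": 0, "fail": 0, "exception": 0}
    )
    for test_name, status in assertions:
        # An unknown status raises KeyError rather than being silently dropped.
        summary[test_name][status] += 1
    return dict(summary)


# Hypothetical assertion data for two tests.
raw = [
    ("NodeTest", "pass"),
    ("NodeTest", "fail"),
    ("UserTest", "pass"),
]
print(summarize(raw))
```

The summary grows with the number of tests rather than the number of assertions, which is what keeps the stored data small.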

Intended workflow

After a developer notices that their patch unexpectedly failed, they will run the failed tests in their dev environment to find out what went wrong and work from there. This keeps the debugging work in the developer's environment instead of on testing.drupal.org.

Patch criteria

One of the remaining issues pertains to what patches should be tested. The following represents what I believe we need from reading the issues and pondering. Some of these are already implemented, but listed for clarity.

- File must have patch or diff extension.

- Issue is for Drupal 7.

- Issue is marked as patch (code needs review) or patch (reviewed & tested by the community).

- Issue is not in documentation component.

- Do not test flag. Something in the filename like D6_*.patch if in a Drupal 7 issue, such as a port. And possibly NOTEST_*.patch or a component? This is the part that needs to be figured out.

If the patch meets the criteria then it will be eligible to be tested and at some point sent for testing.
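The criteria above could be expressed as a single check along the following lines. This is a sketch under assumptions: the `IssueFile` fields, the version string format, and the status strings are hypothetical, and the two filename patterns are only the undecided examples from the list, not an agreed standard.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase

# Assumed status labels for "needs review" and "reviewed & tested".
TESTABLE_STATUSES = {
    "patch (code needs review)",
    "patch (reviewed & tested by the community)",
}
# Undecided "do not test" filename patterns taken from the list above.
SKIP_PATTERNS = ("D6_*.patch", "NOTEST_*.patch")


@dataclass
class IssueFile:
    filename: str
    version: str  # assumed to look like "7.x-dev"
    status: str
    component: str


def is_eligible(issue_file: IssueFile) -> bool:
    """Return True when the file meets every criterion for testing."""
    if not issue_file.filename.lower().endswith((".patch", ".diff")):
        return False
    if not issue_file.version.startswith("7."):
        return False
    if issue_file.status not in TESTABLE_STATUSES:
        return False
    if issue_file.component == "documentation":
        return False
    if any(fnmatchcase(issue_file.filename, p) for p in SKIP_PATTERNS):
        return False
    return True


example = IssueFile(
    "fix.patch", "7.x-dev", "patch (code needs review)", "node system"
)
print(is_eligible(example))
```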

Patch sending event

Patches need to be sent out frequently to ensure they are tested as quickly as possible. To do this, drupal.org will be set up to send files to be tested every 5 minutes.

In addition, once daily, every other day, or on some similar schedule, the entire issue queue should be searched for patches that match the above criteria, regardless of whether they have already been tested, and all of them should be sent for testing. This will ensure that “patch rot”, meaning patches that no longer apply after other changes are committed, is detected automatically.
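The two sending events differ only in whether already tested patches are included, which a small sketch can make concrete. The dictionary keys and file names here are hypothetical.

```python
from typing import Dict, List


def files_to_send(files: List[Dict], full_retest: bool) -> List[str]:
    """Pick the eligible files to send for testing.

    The frequent run sends only untested files; the periodic full
    re-test sends every eligible file so that patch rot is detected.
    """
    return [
        f["name"]
        for f in files
        if f["eligible"] and (full_retest or not f["tested"])
    ]


# Hypothetical queue contents.
queue = [
    {"name": "new.patch", "eligible": True, "tested": False},
    {"name": "old.patch", "eligible": True, "tested": True},
    {"name": "docs.patch", "eligible": False, "tested": False},
]
print(files_to_send(queue, full_retest=False))
print(files_to_send(queue, full_retest=True))
```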

Reporting back

The most exciting and useful feature of this entire endeavor is being saved until last. Once we have all the things mentioned above in place and testing.drupal.org running smoothly then reporting back will be turned on.

Once a patch has been tested, the results will be sent back to drupal.org. Code running on drupal.org will then check the issue that the tested file belongs to and see whether a newer patch has been posted. If there is one, the results will be ignored. If not, the issue status may be changed to reflect the result of testing.
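The check for a newer patch might be sketched as follows. It assumes file IDs increase with upload order and that a failing result moves the issue to a "code needs work" status; both are assumptions, since the exact behavior is still to be discussed.

```python
from typing import List


def status_after_result(
    file_ids: List[int],
    tested_file_id: int,
    passed: bool,
    current_status: str,
) -> str:
    """Return the issue status after a test result arrives."""
    # A result for anything but the newest patch on the issue is ignored.
    if tested_file_id != max(file_ids):
        return current_status
    # Assumed behavior: only a failure changes the status.
    if not passed:
        return "code needs work"
    return current_status


# Hypothetical issue with two patches; file 102 is the newest.
print(status_after_result([101, 102], 101, False, "code needs review"))
print(status_after_result([101, 102], 102, False, "code needs review"))
```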

In addition I envision having the results for each file listed on their comment in a summarized form with a link to the full results on testing.drupal.org.

Roadmap

In order to make this a reality a standard needs to be decided on for the patch criteria, either confirming/appending to the above, or something new. Once that is complete then the code needs to be written and placed on drupal.org.

After that is complete testing.drupal.org should be monitored to ensure it works properly and consistently. In the meantime work will begin on the code related to reporting back to drupal.org.

Community help

It would be very much appreciated if the community would check the results of testing on their patches to Drupal 7 core. This can be done by visiting the following page.

http://testing.drupal.org/pifr/node/1/[node_id]

Make sure that the testing results work and that your patch is tested if it meets the criteria. Please check even if your patch should not be tested, to ensure that it isn’t.

Thanks for your help and post to the queue if you find problems.

-

Final pieces to automated patch testing

I recently had a talk with hunmonk in IRC about what needs to be done to get drupal.org to start sending patches to the new automated testing framework. After almost an hour of discussion and thought we came to the following agreement. (summarized from IRC log)

Initial plan

- PIFT which is already in place on drupal.org will continue to be used to scan the issue queue and send patches to testing.drupal.org. (May need criteria updated.)

- PIFT client will then be ported to Drupal 6.x and benefit from the addition of a hook as opposed to the current mechanism.

- PIFR will be updated to implement the new PIFT hook in the ported Drupal 6.x client.

Future plan

- Reporting back to drupal.org will be discussed a bit further as there are several ways and opinions on how it should work both technically and from a workflow standpoint.

- PIFR will send summary of testing results back to drupal.org through PIFT.

- PIFT will display the results on drupal.org.

Possible future

- PIFT may be further abstracted to allow for general distributed information sending between Drupal sites.

- Reporting logic may be moved out of PIFT and into another module.

Nomenclature:

- PIFT - Project issue file testing platform

- PIFR - Project issue file review (code for new automated testing framework)